Bro-ligarchs on AI “Creativity”: But It Goes To Eleven

What Is At Stake If We Outsource Our Skills To Digital Gods That Are Not Conscious Yet Revered As Such

There is a sickness that we have in our society, and that is to remove all pain. It’s an extreme form of helicopter parenting that I detest because it has robbed generations of the right to fail and ultimately prevail in adverse conditions. When you hear the AI Theologists preach that we are on the cusp of transcending all the boredom and tyranny of the mediocrity that ensnares us, you see that the emptiness resulting from a life bereft of the risk of failure has created an even greater emotional need for the fulfillment they claim AI will bestow. We are empty without overcoming risk.

There are two major themes that disturb me because I perceive them as an assault on our desires and our drives for true actualization through achievement. I also see them as an assault on critical skills such as leadership and judgement. The first theme is the inevitable rise of AI capable of consciousness, a stepping stone to a superior form of intelligence. The other theme is the inevitable replacement of creative endeavors by AI because it will just be better for us to not struggle. The mantra is that it’s only a matter of time before AI will be just as good as Geddy Lee or any of our favorite performers, creators and thinkers, and you won’t be able to tell the difference between the genuine and the cyber creations of the Bro-ligarch platforms. And if you call AI a tool, you just fail to see the superior nature of what’s coming.

The Bro-ligarchs, the dudes who practice Zuckerberg’s Facebook mantra of “Move Fast and Break Things”, are on a quest to create Artificial Super Intelligence that will not only break barriers, but will remove those barriers of effort and pain. As in the comedic mockumentary This Is Spinal Tap, where heavy metal guitarist Nigel Tufnel insists that having 11 on his amplifier signifies being the best because “most other blokes’ amps only go to 10”, the Tech Bros chant that increasing processing power and slapping on various software modules will assuredly deliver AI that is sentient. In fact David Chalmers, leading philosopher of the mind, thinks it’s a 50/50 chance that will occur in 10 years. Never mind that most predictions, in the end, are “it will or will not happen.” Like the weather. I want my time back from reading his essay after he dropped that on me at the end.

But sure, just add more processing power, more energy, more training materials, more libraries of images and music and writing, and BOOM, at 11 the ultimate creative and thinking force emerges. Hey, we may have criticized MC Hammer for sampling Rick James, but since OpenAI has done this in multitudes of permutations for billions of dollars, it’s not facsimile, it’s creation. Crank it to 11.

My Personal Motivation

I have a son who will soon start a career where he will be faced with management and co-workers who have been shaped by these sad developments in our advanced culture. They dovetail into a chant that humans need AI in order to manage the complex systems required to remain modern. Instead of working on simplification, and questioning whether we ourselves are the cause of needless complexity that could simply be eliminated if we acted with more agency and courage, we outsource our problems to yet more abstract and remote processes that may solve our pain points. When you look at the phrase “democratizing X”, as in democratizing the creation of art, or lowering the barriers to creating music, you see that the Tech Bro vanguards believe that we should hate the work we put into our hobbies, and therefore ALL problems should be solved by AI.

That’s quite dumb, but worse, it’s exceedingly dangerous. From the creator’s perspective, you have people of low skill and interest in your field of creativity who think everyone should do just what you can do, that there is greater value in everyone effortlessly achieving that than in acquiring skill, because music is just too hard. Or you have written the same words enough in your lifetime and the process of wordsmithing takes too much time, so as long as you have trained an AI model, and since you have really thought about the themes already, just outsource the refinement to AI. Have it derive a near perfect facsimile of your writing while you collect the clicks, views and likes. Because that’s the “smart” thing to do.

From a professional perspective, it creates a near subservience to systems at the expense of productive cognition. It instills a passive copy and paste nature to productivity. And while many professions have proven to be bloated with individuals whose true skill is merely copying homework, we still need those professions as they are critical parts of our economy, such as finance, legal practice, real estate development, health care and many others. AI is coming after the copy and paste intelligentsia.

The profession my son wants to enter is one of the next targets of AI. My son hates AI, and whenever he sees me watching the latest on AI video creation and prompting, he snorts and leaves the room. “That stuff is so fake and is crap”. While he’s happy with his choice of career, he surprised me one day by asking me “Would you hate it if I joined the Coast Guard?” He loves the water, and has worked on a small cruise boat that operates on the Detroit River and Lake St. Clair, where he’s discovered some of the inlets where rum runners would drop off their contraband. I told him that if it weren’t for the vaccine requirement I would be thrilled if that was what he wanted. The skills and abilities he would obtain from that, which are not found in academia, would be immeasurable. Leadership, judgement and communication, among many others, can’t be found at college.

But he is right in some ways, there is a lot of crap that now is generated effortlessly. There are those with good skills who use AI to do creative things, but they are the creative masters that use AI as part of the entire creative process. I’ve tried my hand at it - it’s super hard.

AI is an impressive tool in a business setting. When used correctly it can synthesize data, rapidly present visualizations of information, taking minutes instead of an hour. A macro on steroids.

But you have to treat it as a tool, not as the assistant that does the heavy lifting for you. I tell my son that while he hates the thought of AI prompting, there will be those of lesser skill who will turn to prompting and present a result faster that will look very polished and have an air of authority. He has to be even better at spotting errors, and also be able to use the latest tools if his industry adopts them. But should he possess better judgement and a keener eye, he shall be the best to deliver.

But that is only if those characteristics are still valued. The two themes I cited endanger our ability to appreciate that, let alone recognize good qualities of human effort.

Destroying the Coolness of Natural Aristocracy

This is going to sound elitist, but being cool is a form of distinction that is natural aristocracy. Being cool arises from distinction from the crowd, some unique quality that you have obtained from devotion to ideals, a unique style of expression or ability to perform at a level higher than others. This does not diminish the value of others as human beings, but for those who can do things that others find hard, it distinguishes them by their ability. I find it amazing that I have to explain this, but in light of the Suno CEO quoted above, I don’t think that is such a common belief any longer.

When we hear the word aristocracy we think of pedigree, but I am taking this phrase “natural aristocracy” from Thomas Jefferson, who described rewarding those of skill, character, talent and virtue with prestige beyond what a landed class deserved.

“natural aristocracy among men,” is grounded in superior demonstrations of virtues and intellectual talents (to John Adams, October 28, 1813). This natural aristocracy is fundamentally different from the “artificial aristocracy founded on wealth and birth, without either virtue or talents”…

With AI, we are on a quest that gives new meaning to “democratizing the ability to create”. Instead of removing barriers to entry and allowing everyone a chance at attempting a new skill, we are removing altogether the need to go through the hard steps of honing the characteristics that produce things of quality. There is no cost to acquiring the ability to create, particularly when you can copy a section of a song, upload it and with a text prompt ask AI to generate something similar. Tastes aside, the ability to make music has now been watered down to copying and pasting. Generative AI for music uses an efficient sampling process, and services such as Suno AI have been trained on items found “publicly” on the internet. That means artists who have videos on YouTube have contributed unknowingly to the process that creates competitive music based on samples of their work. Why would Spotify bother to pay you when they can have a facsimile for cheaper?

That’s theft. OpenAI CTO Mira Murati was unable to cite which sources were used to train OpenAI’s libraries. It’s telling that a C-level executive becomes so stymied when asked a simple question about their product line.

What Big Tech is saying is that talent that distinguishes you no longer matters, as the democratization of talent puts everyone on equal footing. Except that you must go to their platforms to experience creative forms of expression. Skills acquired via the traditional methods don’t matter. They are serving humanity by providing skills in a box, and you simply create with no friction.

If you think back to the statement that making music is just too hard, in a sense that says you should not need to practice. It’s a waste of time now that their platform eliminates the requirement. I can’t help but think of the pragmatists saying to friends “Why do you do the same exercises over and over again, it’s going to take too long to improve. Isn’t that the definition of insanity when you repeat the same thing over and over while expecting a different result than what you obtain now with inefficient methods?”

What is lost here is that the journey to obtaining great technique is more important than listening to a song itself. All the repetition, all the time spent so that movement and timing are so ingrained that you no longer need to look at a keyboard while your hands play, or observe your hand move up and down the neck of a guitar because you know where each note is located, puts several bricks in a foundation of musicality that enables you to play a multitude of songs in different styles. Those days and months become the basis of training your ear to anticipate changes in key or resolution of a melody. That leads to the ability to perform songs at first sitting. Or teach others. Or work well with other musicians. Or take direction from a director or producer.

Analog Kid - When We Didn’t Hate Our Hobbies

I remember when I went with my mother for the first time to watch her practice organ. I had heard and watched her play piano at home, but on this summer day I went with her to the church, and listened from the cool shadows of the nave while she prepped for the upcoming service. I remember this monstrous pipe organ, and my tiny 5’3” mom seated at the keyboard with multiple levels surrounded by all these levers. But what really caught my attention was the fact that my mom could play the foot pedal keyboard with her feet without looking down to find the notes. As a 10 year old that blew my mind. Ask her to play F# and she could feel her way and find it. And quickly.

So should we eliminate pipe organs because AI can reproduce those sounds more easily? Should we care if that happens? Think about it this way: what happens to motivation when you see someone sit down and with a few touches of a computer keyboard, music comes forth, yet you find out that it’s just typing a few things and the rest is done for you by an app? Witnessing mom playing really impressed me because it looked hard to do.

Robbing us of the desire to try hard things denies us the moment that we realize we have mastered something that others have shied away from, or better yet, mastered something you thought no amount of practice would enable you to do. You embraced insanity, and it paid off.

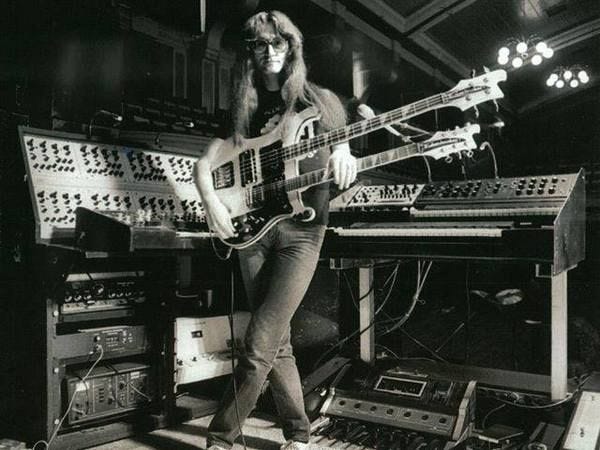

Below is a picture of Geddy Lee, bassist and singer from the progressive rock trio Rush. His gear in that photo was typical for a performance in 1980. But it looks absurdly complicated. In fact it looks like something from the Spinal Tap movie because it’s just so over the top. That gear grew from Geddy’s experimentation and collaboration with his bandmates, and at first was just the pedals that you see at his feet. That is a Moog Taurus bass pedal, which could be configured or “programmed” to produce specific sounds. Geddy has the bass and guitar double neck because for some of the songs that Rush wrote, they were faced with a challenge that backing melody and rhythm guitar were required when the lead guitar player soloed. As Geddy describes it, when Alex Lifeson had to solo during a live performance, there would be a “hole” in the wall of sound that they achieved on their albums. If they overdubbed in the studio, the song would be great but would be impossible to play live. Rush wanted to be true to their live performances.

In a live performance should Geddy accompany a second guitar, they would lose the bass layer. If he played his bass, they would lose the backing rhythm of the guitar chords. The band members, while eager to expand their sound and complexity, did not want to add another player to the group. The bass foot pedals freed up Geddy for other things. As he describes it, his new job in addition to singing and playing or switching to guitar was to be a “footman”.

This led quickly to other synthesizers and the Minimoog. As Geddy describes it, the Minimoog was a challenge, yet something that he could tinker with to “wrap his head around” how to MAKE a new sound. In other words, those iconic notes from Tom Sawyer, Xanadu and other songs were not selected from a preprogrammed library. Those were created with trial and error as Rush wrote their music. Crafted. But in order to do so, Geddy had to learn about wave forms and how that would ultimately impact the sounds he would create. Today this seems so arduous, because we no longer need modular synthesizers because AI has recreated the wheel while eliminating the requirement of long hours of learning how to mix and modulate sound with dials and buttons. You don’t even need to know that different shapes of sound waves produce different results. You no longer have to turn knobs.

This is not a luddite view in the least. What instruments like the Minimoog and others of similar complexity require is for you to deepen your knowledge. To engineer the sounds you wanted, you had to learn. But that knowledge was yours to keep.

Creating AI prompts with Suno requires none of this skill. That may be fine for a 40 second theme song, yet as a substitute for creativity, it falls far short. Yes, AI can conjure all sorts of variations and versions, mix sounds, but it won’t impart the byproduct of knowledge that learning to play an instrument does.

When we destroy that drive to try, we eliminate the multitude of ancillary experience and knowledge that the myopic dimension of AI will not impart. When I read the prognostications of prominent figures in AI who describe how AI will surpass human ability to create, particularly in the field of music, I know immediately that those experts are not musicians. They show no depth of knowledge of the production of music because they only see the end product of a “song” by a famous artist that can be easily mimicked. Those who believe in the inevitable displacement of creativity by AI will describe what I have just written as a sign of desperation and hidden resignation, because I appeal to emotion. That, too, demonstrates their lack of depth regarding the process of musical creation.

But let’s say I am wrong, and AI can create music that sounds as good as accomplished musicians, eliminating our need to struggle and practice. Why should we eliminate the healthy by-products of such struggle? And truthfully, with all the digital libraries available today, why are the most played songs on Spotify from eras prior to 2015?

Cranking It To Eleven

For music nerds who grew up in the 90s, This Is Spinal Tap was a gem, because it really skewered Heavy Metal. They even poked fun at the double necked guitar that Geddy and other dudes in the 80s played. But in Spinal Tap the bass player had a double neck bass, proving again that the formula for success is cranking EVERYTHING to eleven.

The AI evangelists, who are primarily the Silicon Valley CEOs and their cheerleaders, are just as absurd when it comes to spawning consciousness in AI. They insist that by adding more processing power, sentience will leap onto the stage. AI performs better when it has more parameters at its disposal to process requests. This essentially increases its ability to answer questions with a more eloquent response as well as tackle solving problems of greater complexity.

It is a very impressive display of skill, but it is not intelligence. Intelligence also encompasses the ability to adapt to new stimuli, and currently LLMs need to be programmed - added to - to incorporate new capacity. And AI won’t identify problems to solve on its own; it waits for you to provide the problem to solve. It doesn’t say “Today I think I’ll work on …” It needs you to initiate the process. And AI operates in a very narrow context. Originally chatbots were confined to just the text that you provided. Multimodal modes have expanded the number of inputs to include voice recognition and visual interpretation, which still translate your vocalizations, and the objects identified in your photos, into text. This has great utility, but to hope that cranking all of these facets to 11 creates a being with free will and sentience is a huge stretch. But it is attracting investment dollars.

Even to initiate the conversation with a chatbot, you have to set the stage for yourself. You pick the model that is either designed for faster results, or for longer term tasks, which consumes more electricity. If you pick a text mode yet decide you want to generate an image, you have to manually switch modes. There’s that old school drop down menu selection or toggle button you need to push. For as advanced as the Tech Bros say AI is, I liken it to rubbing a magic bottle and, after you ask the genie to grant your wish, having the genie tell you that you have to rub the other bottle, the “give me a million dollars” bottle, “because I am the genie that makes you beautiful, riches aren’t my department”.

A new study has been released reporting that the later AI models, while capable of answering more eloquently, are also hallucinating more. The LLMs get stuck recommending software libraries that don’t exist during your joint “vibe” coding sessions. Or they stubbornly insist that the URLs to the references they used to answer your query are functional despite the 404 error you see in your browser. We seem so willing to overlook failings that we would not have put up with just a few years ago. In part that is not because AI is in beta, but because it is pitched as a burgeoning new form of “thinking”, so we are expected to give it a pass when considering replacing thousands in the workplace, even when it can’t admit that it needs a calculator after getting math problems wrong, or when it does not accurately relay how it arrived at the answer it just gave you.

Recently OpenAI had to roll back an update to ChatGPT that made it too sycophantic. The AI did not “learn” this behavior; it was installed like any other software release. This new behavior was proven to be beyond absurd with the following answer to the prompt below. It’s self explanatory.

AI powered by LLMs is outstanding at a range of functions that are less flexible than the Bro-ligarchs let on. It's still very impressive, but it is limited to language processing, semantic analysis and vision transformers. When I walk away from my keyboard with the laptop camera switched on, AI doesn’t suddenly decide to scan the books on my shelf, call up information on the title it found and then generate images based on what it’s read.

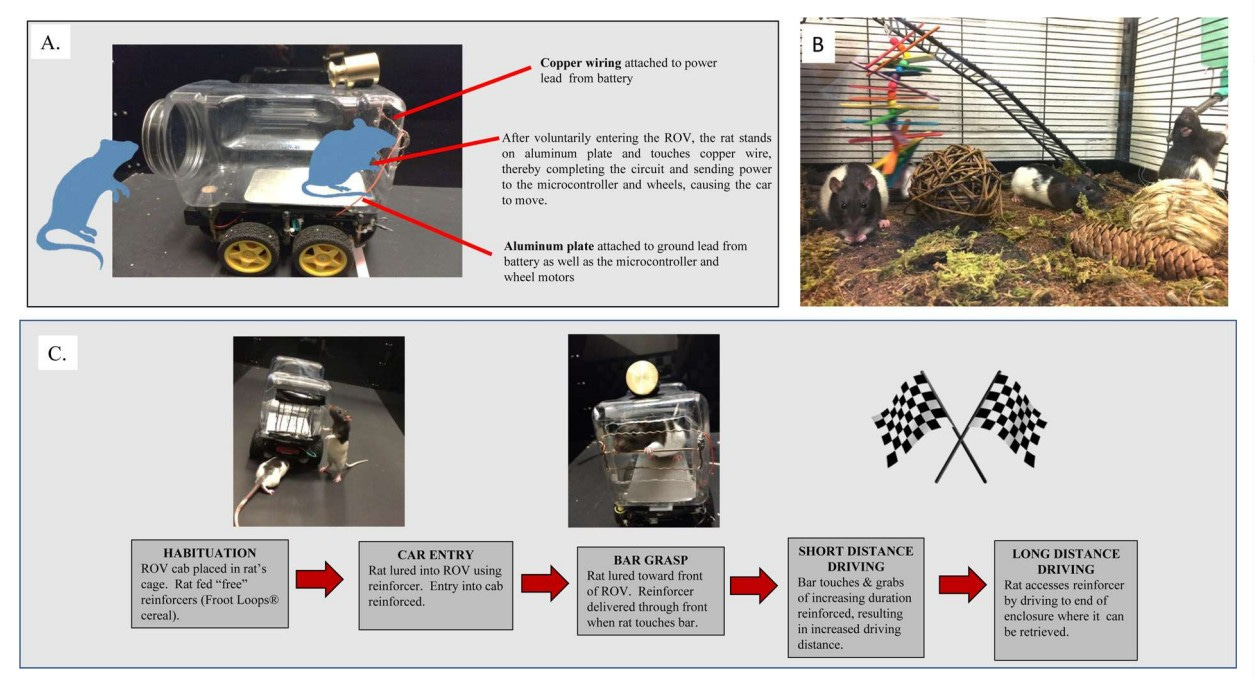

Lab rats demonstrate a greater intelligence and flexibility with respect to adaptability. In a study, rats were trained to drive specialized vehicles. The rats were not genetically modified, so their genes were not cranked to 11; they possessed the innate ability to adapt to new stimulation and perform tasks outside their given function. In 8 weeks the rats were able to drive their vehicle over 3 feet for a food reward. That didn’t take an Executive Order, nor a few billion from the Department of Energy to construct a nuclear power plant. No specific upgrades to the rats were required, other than consistent reinforcement learning. You can’t train an LLM to build a geospatial model of your home. It has a very narrow function. You have to create that capacity for AI.

In pilot work, two young adult male rats (approximately 65 days old) learned to drive forward a distance of about 110 cms after about a month of training sessions (consisting of at least four 15 min sessions per week). In the current study, animals were trained three times weekly for eight weeks (individual trials were approximately 5 min in this study), during which time the rats’ latency to enter the car, contact the driving bars to move the car, and completion of a full drive to the reward station were recorded

Being Artificially Finnish or How I Aced The Turing Test

A long standing mechanism for judging whether a computer program demonstrates intelligence has been the Turing Test. Formulated by Alan Turing, a British mathematician and cryptanalyst who studied methods to decipher German encrypted communications during World War II, the Turing Test is a blind test where the program and a human engage in a conversation, and if the human believes that the entity on the other end of the conversation is also human, the program is considered intelligent. By employing a loose definition of intelligence, many erroneously conclude that there is a degree of consciousness behind the conversation on the other side of the screen. The Turing Test has no real qualifying questions or required length of time for such a conversation between program and human; it is only sufficient for the human to believe that the other party is also human.

I had my own version of the Turing Test, and I passed it in awesome style with a single word. In 2012 I had the opportunity to take my family to Finland for 2 weeks. We were invited by a Finnish family who lived down the street from us. Vile’s company had sent him to Detroit and our kids played together. They have a beautiful home outside of Helsinki. I love languages, and if I remain in an area long enough, I like to learn as much as possible so I can function while ordering, asking for directions and getting to know people. Just a few words in the native tongue can open conversations. If I can pull this off without being recognized as an American, I’ve won. If they think I’m a native at first, I’ve won big time.

The Finns have a greeting “Moi”. It’s spoken very tersely. Almost like “Hey”. And while I got to the point where I could order pizza, it was only with two or three sentences. But language is also about attitude, demeanor and a bunch of other factors that we tend to forget are responsible for communicating as well.

On our last day, my son, who was 5 at the time, wanted a comic book for the flight back, so we went back to a bookstore in Helsinki where they had a great section for kids. With his comic book in hand, we went to pay.

“Moi,” I said, putting the comic down. I slapped my credit card down as though I were paying for beer. The lady at the register smiled and then started speaking to me, a rapid string of words with maybe one or two I thought I recognized. Then she looked down at my card, read my name, then back at me, laughing.

“Sorry - I thought you were Finnish.” It was funny, I fooled her. And with one word I sounded convincingly native enough to pass as a dad from Finland getting a comic book for his son. One word. I was proud of myself. I passed a Turing Test.

That says a lot about human behavior, and it says a lot about the validity of the Turing Test. A sales clerk mistook me for a native, and had she studied me long enough instead of remaining focused on her job, I bet she would have figured out I was an American. With AI we just have text, not many other clues, and it is no wonder that a loquacious answer to our queries gradually places us in a different context where we are less aware that we are not speaking with anyone. This gradual approximation affects our judgement in ways that are subtle, and I think this leads us to ascribe characteristics that are manufactured more from our minds than from the mere words on the screen.

In the rat study I cited previously I ran across this passage, which imparts something that we are losing if we allow AI to take such primacy in leading our conversations and thinking.

Across an animal’s lifespan, the accumulation of informative experiences, whether they are driving skills or another skill set, likely contribute to emotional resilience, providing buffers against subsequent neural threats and challenges

We are more willing to accept that observation about another species of animal than we are when wondering about the deleterious effect of long hours spent with an eloquent software application. We certainly ignore that concern regarding social media. And this is an application that is being pushed not only as a superior substitute for human to human instruction and interaction, but as a substitute for the hard work required to gain the confidence that comes from skill acquisition. In fact, you won’t be required to do a thing, it will just create for you as you sit back and enjoy.

You have to ask yourself if all those who bemoan the death of creativity due to the inevitable rise of AI are not giving in too easily. For one, they may lack the skill to judge quality in those particular subject areas of creative endeavors. Think of it this way: if you have only heard organ music in your earbuds but not in a live venue of a church or cathedral where the building vibrates with that majestic sound, you are missing crucial elements of experience. The Kurzweil fanboys can crank AI to 11 all they want, but AI is not the only gig in town. If we relent and let AI do the copying and pasting for us, we are barely a passive audience.

Why let your best performances go to waste?

Great post! Yes, the Turing Test should probably just be called the Human Deception test.

The current generation never knew the peace of mind that existed prior to social media.

The next generation will never know what it was like to have a mind. We lose the things we don't use.