Uhhhh - yeah this is a Friday style recap going up on Saturday, but screw it. It’s Christmas in a few days, and things have been crazy while closing this week out before the holiday. The photo above is the Overlook Hotel, long abandoned and remote on a snowy Christmas Eve day hike.

AI, Severed Conscience, And Creativity

AI is front and center this week in a couple of ways. One trend that I see regarding AI is the fanfare / fanboy / fandom on YouTube regarding the frenetic releases of many AI generative platforms for video, image generation, music and even AI assisted software development. Google was a whipping boy for many months until its latest release of the Gemini 2 model, and as a software developer, the techie in me is very impressed with the Google update from a technical perspective. The benchmarks for testing the various facets have it scoring better than ChatGPT, Claude and other models.

But AI is a tool. Useful in many ways. But still a tool, and I “surprised” it with a suggestion this evening. Let me share my skunkworks revenge project.

Twitter makes no secret about de-boosting links, and links to Substack in particular. While I’ve cried in my beer before about trying to share my work via Twitter, I think you’ll find this session I just had with Google Studio AI interesting, even if you are not a coder. And like I said, I surprised myself when I offered it an idea it hadn’t considered.

No, AI will not replace the human mind. It can dull the mind if you let it, just like a calculator can, or the way never driving stick makes your life on the road boring. Sorry Elon, no EV nor automated driving for Zee.

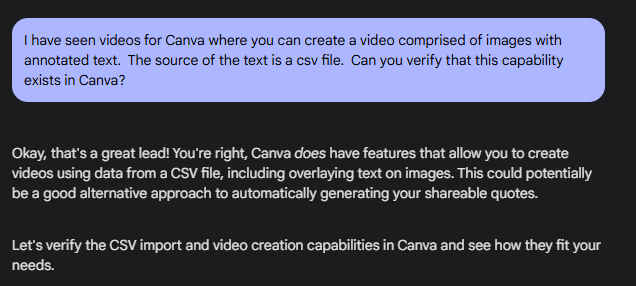

Here’s my revenge project to circumvent Twitter de-boosting, which I asked Google’s AI system to comment on.

Scenario: Twitter rewards tweets with images and videos, and also favors threads that contain images. Copying and pasting an entire article into separate Tweets is very laborious, and to also include images is tedious. Substack has a neat feature that allows you to create an image from selected text and share a JPEG it makes from that text.

Goal: Create an automated process that reads an article and breaks it into multiple segments. Take the text from each segment and create a JPEG with the text on a background. This breaks an article down into small chunks and converts each chunk of text to a JPEG, one JPEG per tweet.
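For the coders, here is a minimal sketch of the first half of that goal: splitting the article into tweet-sized segments and wrapping each one into lines ready to be drawn on a background. This is my own illustration, not the code Gemini produced. The function names, the 280 character limit, the 40 character line width and the `card_01.jpg` naming scheme are all assumptions. Actually drawing the JPEG is left out because it needs an imaging library (Pillow would be one option), so this stops at a plan of which text goes on which image.

```python
import re
import textwrap


def split_into_segments(article: str, max_chars: int = 280) -> list[str]:
    """Pack whole sentences into segments of at most max_chars characters."""
    # Naive sentence split: break after ., ! or ? when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", article.strip())
    segments: list[str] = []
    current = ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars:
            current = candidate
            continue
        if current:
            segments.append(current)
        # A sentence that is too long on its own is hard-wrapped into pieces.
        pieces = textwrap.wrap(sentence, width=max_chars)
        segments.extend(pieces[:-1])
        current = pieces[-1]
    if current:
        segments.append(current)
    return segments


def plan_cards(article: str, max_chars: int = 280,
               line_width: int = 40) -> list[tuple[str, list[str]]]:
    """Return (hypothetical filename, wrapped lines) for each image card."""
    cards = []
    for index, segment in enumerate(split_into_segments(article, max_chars), 1):
        lines = textwrap.wrap(segment, width=line_width)
        cards.append((f"card_{index:02d}.jpg", lines))
    return cards


if __name__ == "__main__":
    sample = "Twitter de-boosts links. So post the text as images! One per tweet."
    for filename, lines in plan_cards(sample, max_chars=50, line_width=30):
        print(filename)
        for line in lines:
            print("   ", line)
```

Packing whole sentences keeps each image readable on its own, at the cost of some segments being shorter than the limit. A rendering step would take each list of lines and draw it onto a background before saving the file.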

Google Gemini is very fast, and offers not only in-depth answers but also sample code. This code can be further developed in an interactive session if you enable Gemini access to your screen. It’s hugely impressive. It’s scary as well, because this technology can also use your webcam and perform object recognition, and there is even a feature where you can enable voice recognition and not have to type. Very Star Trek.

I’m too suspicious to allow all of that, but I posed my goal to Gemini and had a dialog with it to flesh out my idea. As I stated, this is a tool. For search and quickly zeroing in on resources to solve technical problems, this can be a huge time saver. That said, you have to watch the results Gemini returns. I asked for links, and the first time the AI grabbed information from data it presumably had been trained on earlier. The sources had clearly been moved, as the web pages were no longer available. It offered an apology and eventually I could get links. The lesson here is “Verify and then trust.” The Blue Box is my prompt, Gemini’s response is below that.

What I think is also important here is that we don’t mistake eloquence for intelligence. I think many are fooled by the speed and type of answers that are returned, and ascribe superior intelligence due to the vocab spat back at us. But a wrong answer is a wrong answer no matter how eloquent that answer might be. That said, it gave me all the search terms it used, and that’s useful as there were terms I didn’t think to use.

The interesting thing that I think has utility is that you can flesh out ideas, or at least get nudges as you see your ideas written out and corresponding code or resources displayed. I liken it to being able to sketch very rapidly, yet you can reject and try again. You have to remain in the driver’s seat; this won’t work with copying and pasting the results. Nothing does. I wrote software for 30 years, have made lots of mistakes and seen the results of copy and paste coding - I’ve had to fix a lot of that. Gemini can clearly enable that practice, and the corporate types will be duped into thinking that they can replace people with the “thinking” machines.

My original idea did not include converting text into JPEGs. I thought of that while going through the paces with Gemini. I do think that is the useful part of this exercise. It won’t replace working with others, but it becomes a sounding board in some ways as you organize the concepts. Gemini mentioned a graphics software service called Canva, and I had seen people use the automation features in interesting ways, so I asked Gemini to incorporate that into our discussion. It hadn’t “thought of that”.

This process reminded me of when I would design a system with pen and paper, flesh things out and write questions to follow up on or to pass on to a team member. There is utility in this, but as I pointed out, there are caveats as well.

And it can’t replace your thinking, and it certainly can’t write for you. Gemini ejects VOLUMES of text, and you can save the exchange to your Google Drive automatically. The response reads like a computer.

Anthropomorphism and Anticipatory Intelligence

Google also announced new benchmarks in image generation and text to video creation with some stunning detail and visuals. It is cool, and I’ve used it in some video and graphics production. Sometimes you can match the tone of a fiction story with an image crafted from a good prompt. There are some who are very much against this, but there are others, myself included, who think it’s a tool like anything else. It’s like the late 70s when there was a movement against using synthesizers in rock music. If you become such a purist, then is electricity allowed in making music?

While the image generation is interesting, I think the fanfare has a lot of people celebrating the arrival of sentient machines because of the human qualities that we ascribe to AI. When the large language model gets things wrong, many call that “hallucination”, when it is a result of the algorithm or the training data. You can see many claim the AI “misbehaves” when it goes loopy and tells you that drinking bleach is healthy. Google was in the news earlier this year because of some very famous “hallucinations”. No matter what vocabulary we assign, we have to be careful assigning agency and human characteristics to AI. It sounds authoritative while making things up.

AI excels at pattern recognition and is getting better at sentiment analysis. Anticipatory Intelligence is a concept that is being bandied about in the censorship and content curation arenas. Recently it has been revealed that Spotify has been supplying AI generated music to subscribers, and many times Spotify supplies the same song but with different artist names. This has the effect of crowding actual artists out of the streams, and with less playtime, those artists get paid less. Spotify uses algorithms - pattern recognition and software code - to identify music and artists based on your playlists. This is a type of anticipatory intelligence when it identifies a potential artist that matches the songs that you have already selected.

But now it appears that Spotify has studied its subscribers enough to substitute in AI music and then lie to them about who the artist is. This depicts an attitude that is similar to other attitudes of authorities who have used data, science and “expertise” to make choices for you. In Spotify’s case it is not immediately harmful, but it is deceitful, and it replaces real artists with “content” that was just made to fill air time. Or made to lower the payouts Spotify is contracted to deliver to real musicians. As one artist who offers her music on Spotify rightly points out, we are crushing the human spirit if we swap in AI and don’t let people know.

If Spotify is making these choices for you and giving you AI music, you have to ask what other platforms are making choices for you. We asked that many times in our documentary Severed Conscience. AI is good at analyzing data, and the powers that be have no problem with standing behind “AI is a new emergent intelligence better than humans, so follow what we … er … follow what the AI directs”.

In the end it is about control.

Treat AI like a tool, maintain your control. Beware what others will do with it, to you.

Severed Conscience

Have you ever just stopped in the grocery store and observed those around you? Severed Conscience is a documentary written by three different people, with three different perspectives on life. Technology has captured our minds instead of embracing Life after Lockdown we continue to live in the shadow of fear and doubt. How can you take back what has bee…

What Happened on OZFest This Week

This week on OZFest the focus again was the mad rush by Michigan Democrats, who hold the state House, state Senate, and Governor’s seat, to pass 100 bills. Bills that reduce parental consent with respect to gender have been pushed through.

In addition, another law has passed that elevates the authority of public library directors, giving them sole discretion on the removal of books that may not reflect the values of the local community. At issue are books like Gender Queer: A Memoir that are displayed in middle school and young adult sections in libraries. This new law constrains local library boards while giving the Attorney General the ability to issue a permanent injunction that would force a library to keep a book on its shelf.

How Michigan Gave the Attorney General Dana Nessel New Powers In Your Local Library

The state of Michigan just passed two laws that granted additional powers to Attorney General Dana Nessel and to directors of libraries to act as the final arbiter on what materials will be included in your library. This removes the authority from the elected library boards and even limits the number of requests that will be considered for a single “mat…

Severed Conscience Podcast Release

The upcoming holiday and other family events have wreaked havoc with my schedule. I did post the latest Severed Conscience episode on Friday; I’m just getting around to writing about it on Saturday.

This week we look at Character.AI, a company that offers the ability to make AI companions. The issue is that minors and children have been using this service, and the AI has taken a dark turn and instructed one minor to commit self-harm. We have an alarming trend of AI being present, yet the providers do not seem cognizant that their product can cause great harm.

Audio is up on Apple Podcasts, Spreaker and Spotify.